The myth that bigger is better dominates the AI sector. Companies chase ever-larger models on the assumption that more parameters lead to better performance. Ashvini Kumar Jindal, an AI engineer and open-source LLM innovator, challenges that notion with something as simple as a single GPU at home.

Working Inside, Building Outside

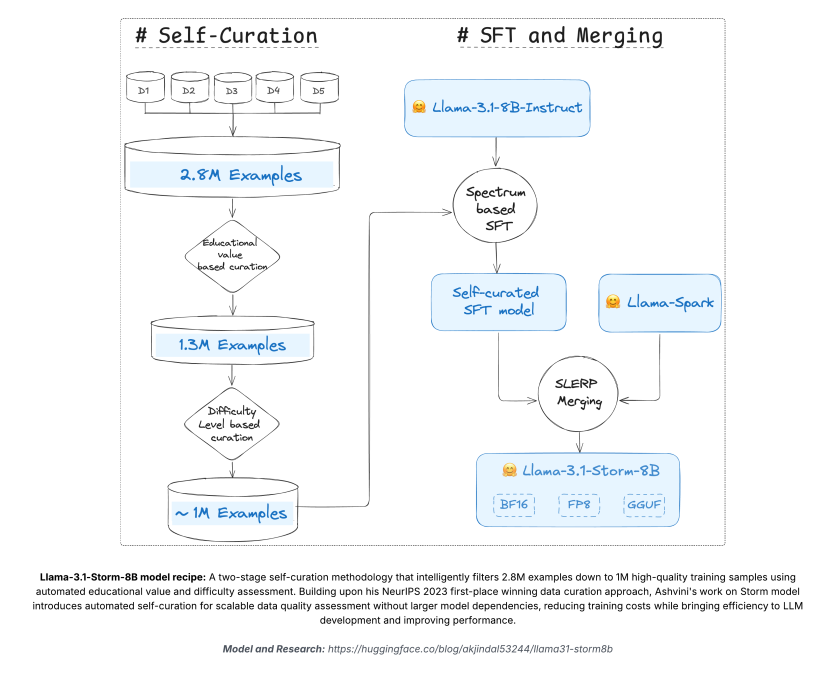

Jindal operates on two fronts. Professionally, he contributes to enterprise-grade AI systems at LinkedIn. Privately, he is an independent builder who releases open-source foundational AI models that challenge the status quo. One of his projects, Llama-3.1-Storm-8B, gained widespread recognition within open-source circles for its performance.

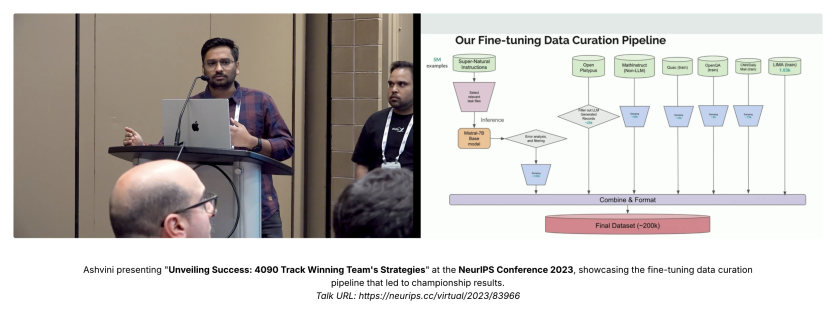

No major lab backed his work. Jindal didn't rely on cloud credits or large teams. Instead, he used a single high-performance workstation. That machine, together with the data-centric strategies he employed, powered his victory at the 2023 NeurIPS LLM Efficiency Challenge. The win was a public counterargument to the industry's over-reliance on scale.

Data Is the Differentiator in AI Innovation

For Jindal, data-centric AI is a guiding principle. He believes that fundamental breakthroughs and gains in model performance come from careful data curation, and that tweaks to architectures alone aren't enough. While others discuss their parameter counts, he perfects what goes into the model.

His approach to self-curation is more disruptive still. He rejects the belief that better AI needs more data. In his view, it needs better data. High-quality datasets must be selected with precision, cleaned with care, and structured with intention.

Let the Work Speak

The industry is flooded with project presentations. Jindal takes a different route to getting his work noticed: he prefers "prototypes over PowerPoints." This build-first, talk-later method emphasizes results, and it has earned him a growing reputation among engineers and researchers.

The proof-first mindset also helps him tackle problems in large organizations. Take the challenge of introducing a new idea. Jindal doesn't just explain it. The first thing he does is build a working version. The prototype may be rough, but he believes it is more persuasive than a polished slide deck.

Personal Projects with Big Impact

Jindal's foray into AI began with a fascination for how data could unlock intelligence. Originally from India, he followed that curiosity to Silicon Valley. Along the way, he developed a unique point of view about AI. He saw that data is the real moat in AI.

A series of personal projects tested and validated his theories, and those findings culminated in his win at the NeurIPS LLM Efficiency Challenge. For Jindal, these efforts aren't just side projects. They're statements that impactful AI doesn't require permission, just initiative.

Rethinking What Progress Looks Like

Jindal's long-term vision doesn't hinge on building the biggest model in the room. Instead, he's focused on efficient LLM development and on making AI at scale practical, sustainable, and useful. One particular area of interest is domain-specific models: compact systems that perform well in targeted use cases without requiring massive infrastructure.

Another focus area is the two-way exchange between enterprise and open-source work. Many lessons are learned inside enterprise LLM environments, and Jindal wants to see them fed back into open-source AI models, and vice versa. That balance, to him, is essential for the future of AI development.

What You Can Learn from Ashvini Jindal

Jindal's work is a reminder that breakthroughs don't always come from where you expect. You don't need institutional backing or massive compute budgets. What's needed is an eye for data, a willingness to experiment, and the patience to let your results speak.

Interested in Ashvini Kumar Jindal's work? Follow him on LinkedIn, Hugging Face, or X/Twitter. But don't stop there. Consider his principles for democratizing AI and for building AI for professional networks: build with purpose, prioritize precision, and don't wait for permission to rethink what's possible.


C114 Communication Network
