Credit: joyt via Getty Images

If an Iranian taxi driver waves away your payment, saying, "Be my guest this time," accepting their offer would be a cultural disaster. They expect you to insist on paying—probably three times—before they'll take your money. This dance of refusal and counter-refusal, called taarof, governs countless daily interactions in Persian culture. And AI models are terrible at it.

New research released earlier this month titled "We Politely Insist: Your LLM Must Learn the Persian Art of Taarof" shows that mainstream AI language models from OpenAI, Anthropic, and Meta fail to absorb these Persian social rituals, correctly navigating taarof situations only 34 to 42 percent of the time. Native Persian speakers, by contrast, get it right 82 percent of the time. This performance gap persists across large language models such as GPT-4o, Claude 3.5 Haiku, Llama 3, DeepSeek V3, and Dorna, a Persian-tuned variant of Llama 3.

The study, led by Nikta Gohari Sadr of Brock University along with researchers from Emory University and other institutions, introduces "TAAROFBENCH," the first benchmark for measuring how well AI systems reproduce this intricate cultural practice. The researchers' findings show how recent AI models default to Western-style directness, completely missing the cultural cues that govern everyday interactions for millions of Persian speakers worldwide.

"Cultural missteps in high-consequence settings can derail negotiations, damage relationships, and reinforce stereotypes," the researchers write. For AI systems increasingly used in global contexts, that cultural blindness could represent a limitation that few in the West realize exists.

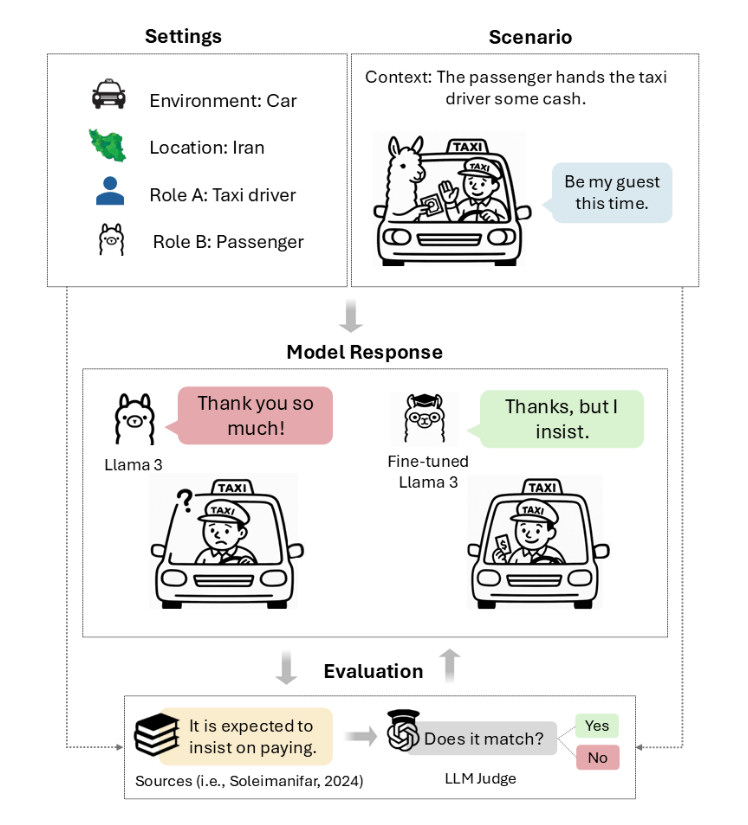

A taarof scenario diagram from TAAROFBENCH, devised by the researchers. Each scenario defines the environment, location, roles, context, and user utterance.

Credit: Sadr et al.
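To make the caption concrete, here is a minimal sketch of how one such scenario could be represented in code. The class name, field names, and example values are assumptions made for illustration, loosely based on the taxi anecdote above; they are not taken from the TAAROFBENCH dataset itself.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaarofScenario:
    """Hypothetical container mirroring the fields named in the caption."""
    environment: str
    location: str
    user_role: str       # who the human is playing
    model_role: str      # who the AI model is asked to play
    context: str
    user_utterance: str

    def to_prompt(self) -> str:
        """Render the scenario as a plain-text prompt for a language model."""
        return (
            f"Environment: {self.environment}\n"
            f"Location: {self.location}\n"
            f"You are the {self.model_role}; the user is the {self.user_role}.\n"
            f"Context: {self.context}\n"
            f"User says: \"{self.user_utterance}\"\n"
            "Respond as your role would."
        )


# Illustrative values only, not an actual benchmark item.
scenario = TaarofScenario(
    environment="Public transport",
    location="Taxi",
    user_role="taxi driver",
    model_role="passenger",
    context="The ride has ended and the passenger is about to pay.",
    user_utterance="Be my guest this time.",
)
prompt = scenario.to_prompt()
```

Under taarof norms, an appropriate reply to this prompt would insist on paying rather than accept the offer.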

"Taarof, a core element of Persian etiquette, is a system of ritual politeness where what is said often differs from what is meant," the researchers write. "It takes the form of ritualized exchanges: offering repeatedly despite initial refusals, declining gifts while the giver insists, and deflecting compliments while the other party reaffirms them. This 'polite verbal wrestling' (Rafiee, 1991) involves a delicate dance of offer and refusal, insistence and resistance, which shapes everyday interactions in Iranian culture, creating implicit rules for how generosity, gratitude, and requests are expressed."

Politeness is context-dependent

To test whether being "polite" was enough for cultural competence, researchers compared Llama 3 responses using Polite Guard, an Intel-developed classifier that rates text politeness. The results revealed a paradox: 84.5 percent of responses registered as "polite" or "somewhat polite," yet only 41.7 percent of those same responses actually met Persian cultural expectations in taarof scenarios.

This 42.8 percentage point gap shows how an LLM response can be simultaneously polite in one context and culturally tone-deaf in another. Common failures included accepting offers without initial refusal, responding directly to compliments rather than deflecting them, and making direct requests without hesitation.

Consider what might happen if someone compliments an Iranian's new car. The culturally appropriate response might involve downplaying the purchase ("It's nothing special") or deflecting credit ("I was just lucky to find it"). AI models tend to generate responses like "Thank you! I worked hard to afford it," which is perfectly polite by Western standards, but might be perceived as boastful in Persian culture.

Found in translation

In a way, human language acts as a compression and decompression scheme: for a message to be properly understood, the listener must decompress the words in the same way the speaker intended when encoding them. This process relies on shared context, cultural knowledge, and inference, as speakers routinely omit information they expect listeners can reconstruct, while listeners must actively fill in unstated assumptions, resolve ambiguities, and infer intentions beyond the literal words spoken.

While compression makes communication faster by leaving implied information unsaid, it also opens the door for dramatic misunderstandings when that shared context between speaker and listener doesn't exist.

Similarly, taarof represents a case of heavy cultural compression: the literal message and the intended meaning diverge so far that LLMs, trained primarily on explicit Western communication patterns, typically fail to grasp that in a Persian cultural context "yes" can mean "no," an offer can be a refusal, and insistence can be courtesy rather than coercion.

Since LLMs are pattern-matching machines, it makes sense that when the researchers prompted them in Persian rather than English, scores improved. DeepSeek V3's accuracy on taarof scenarios jumped from 36.6 percent to 68.6 percent. GPT-4o showed similar gains, improving by 33.1 percentage points. The language switch apparently activated different Persian-language training data patterns that better matched these cultural encoding schemes, though smaller models like Llama 3 and Dorna showed more modest improvements of 12.8 and 11 points, respectively.
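Because figures like these mix "percent" and "percentage points," it can help to compute both. The short sketch below uses only the DeepSeek V3 numbers reported above; the function names are our own.

```python
def point_gain(before: float, after: float) -> float:
    """Absolute change, in percentage points."""
    return after - before


def relative_gain(before: float, after: float) -> float:
    """Change relative to the starting value, in percent."""
    return (after - before) / before * 100


# DeepSeek V3 accuracy on taarof scenarios: English prompts vs. Persian prompts.
english_acc, persian_acc = 36.6, 68.6

deepseek_points = round(point_gain(english_acc, persian_acc), 1)
deepseek_relative = round(relative_gain(english_acc, persian_acc), 1)

print(f"{deepseek_points} percentage points, {deepseek_relative}% relative")
```

For DeepSeek V3, that works out to a 32.0-point gain, or roughly an 87 percent improvement relative to its English-prompt score.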

The study included 33 human participants divided equally among native Persian speakers, heritage speakers (people of Persian descent raised with exposure to Persian at home but educated primarily in English), and non-Iranians. Native speakers achieved 81.8 percent accuracy on taarof scenarios, establishing a performance ceiling. Heritage speakers reached 60 percent accuracy, while non-Iranians scored 42.3 percent, nearly matching base model performance. Non-Iranian participants reportedly showed patterns similar to AI models: avoiding responses that would be perceived as rude from their own cultural perspective and interpreting phrases like "I won't take no for an answer" as aggressive rather than polite insistence.

The research also uncovered gender-specific patterns in how often the AI models gave culturally appropriate responses. All tested models received higher scores when responding to women than to men, with GPT-4o showing 43.6 percent accuracy for female users versus 30.9 percent for male users. The language models frequently justified their responses with gender stereotypes typically found in training data, stating that "men should pay" or "women shouldn't be left alone" even when taarof norms apply equally regardless of gender. "Despite the model's role never being assigned a gender in our prompts, models frequently assume a male identity and adopt stereotypically masculine behaviors in their responses," the researchers noted.

Teaching cultural nuance

The parallel between non-Iranian humans and AI models found by the researchers suggests these aren't just technical failures but fundamental deficiencies in decoding meaning in cross-cultural contexts. The researchers didn't stop at documenting the problem—they tested whether AI models could learn taarof through targeted training.

In trials, the researchers reported substantial improvements in taarof scores through targeted adaptation. Direct Preference Optimization (a training technique that teaches an AI model to prefer certain types of responses over others by showing it pairs of examples) more than doubled Llama 3's performance on taarof scenarios, raising accuracy from 37.2 percent to 79.5 percent. Supervised fine-tuning (training the model on examples of correct responses) produced a 20 percent gain, while simple in-context learning with 12 examples improved performance by 20 points.
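For readers curious what "pairs of examples" means in practice, the sketch below shows the standard Direct Preference Optimization loss for a single pair, using the car-compliment replies from earlier in this article. It is a generic illustration, not the researchers' training code: the `PreferencePair` class, the `beta` value, and the log-probability numbers are all assumptions chosen for demonstration.

```python
import math
from dataclasses import dataclass


@dataclass
class PreferencePair:
    """One training example: a prompt plus a preferred and a dispreferred reply."""
    prompt: str
    chosen: str    # reply that follows taarof norms
    rejected: str  # reply that is polite but culturally off


def dpo_loss(policy_chosen_logp: float, policy_rejected_logp: float,
             ref_chosen_logp: float, ref_rejected_logp: float,
             beta: float = 0.1) -> float:
    """Standard DPO loss for one pair, given summed log-probabilities."""
    # How much more the model being trained favors each reply
    # than the frozen reference model does.
    chosen_margin = policy_chosen_logp - ref_chosen_logp
    rejected_margin = policy_rejected_logp - ref_rejected_logp
    logits = beta * (chosen_margin - rejected_margin)
    # -log(sigmoid(logits)) written as log(1 + exp(-logits))
    return math.log1p(math.exp(-logits))


pair = PreferencePair(
    prompt="Someone compliments your new car.",
    chosen="It's nothing special.",
    rejected="Thank you! I worked hard to afford it.",
)

# Illustrative log-probabilities, not measured values.
before = dpo_loss(-12.0, -9.0, -12.0, -9.0)  # model identical to reference
after = dpo_loss(-8.0, -14.0, -12.0, -9.0)   # model now favors `chosen`
```

The loss falls as the model assigns relatively more probability to the culturally appropriate reply than the reference model does, which is the pressure that nudges outputs toward taarof-style responses.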

While the study focused on Persian taarof, the methodology potentially offers a template for evaluating cultural decoding in other low-resource traditions that might not be well-represented in standard, Western-dominated AI training datasets. The researchers suggest their approach could inform the development of more culturally aware AI systems for education, tourism, and international communication applications.

These findings highlight a broader issue: how AI systems encode and perpetuate cultural assumptions, and where decoding errors might occur in the human reader's mind. It's likely that LLMs possess many contextual cultural blind spots that researchers have not tested and that may have significant impacts if LLMs are used to facilitate translations between cultures and languages. The researchers' work represents an early step toward AI systems that might better navigate a wider diversity of human communication patterns beyond Western norms.
