Amin Zabardast/Unsplash

Apple's latest artificial intelligence model, FastVLM, is now available for users to try out, offering significantly faster video captioning that can describe what a device's camera captures.

Reports claim that the model offers near-instant processing of high-resolution images, making it one of the most notable technologies available for live captioning.

Apple has been developing its open MLX framework since 2023, and given reports that the company is working on smart glasses, there is speculation that this technology could ship with the wearable.

Apple's FastVLM Video-Captioning AI Is Now Live

Isaiah Richard/TechTimes

Apple released FastVLM several months ago, according to 9to5Mac. It is a Visual Language Model (VLM) built on MLX, Apple's open machine learning framework designed specifically for Apple Silicon.

In its current iteration, it is said to be 85 times faster at video captioning and about a third the size of similar models on the market.

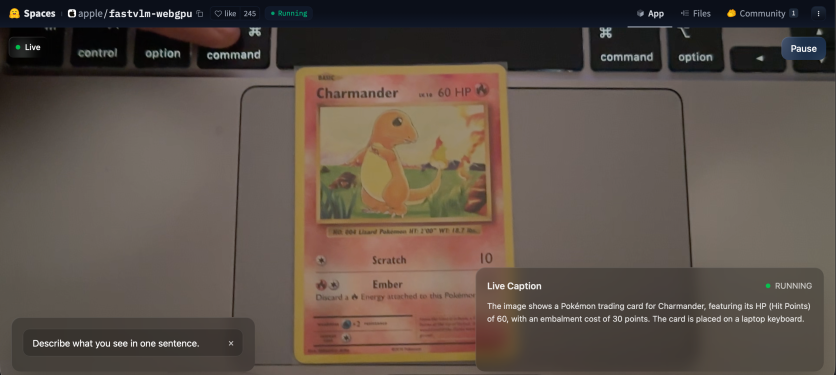

The video-captioning AI can describe what the camera has captured or come across, offering near-instant responses, according to the report. However, the camera needs to be focused on the object the user wants processed before the model can produce a live caption.

As of press time, the live captioning feature offers only a handful of preset prompts, including "Describe what you see in one sentence," "Identify any text or written content visible," and more.

There's a Catch to Trying Apple's FastVLM AI

Users can now try the technology through the files Apple uploaded to its Hugging Face repository or, alternatively, use the lighter web-based version, FastVLM 0.5B.

However, it is important to note that this technology was designed for Apple Silicon and its MLX framework. Additionally, 9to5Mac reported that the main version of the AI took a long time to load, even on an M2 Mac with 16GB of memory.

Apple's Smart Glasses and AR Tech

Multiple reports earlier this year drew attention to Apple's development of its own smart glasses, an everyday wearable meant to compete with the Ray-Ban Meta.

It was initially claimed that the device would arrive in 2026, but later reports said that Apple is not yet ready with the technology and is targeting 2027 to debut the wearable alongside AirPods with cameras.

Other wearables are also reportedly in the pipeline as part of Apple's plans to enter the AR market, including a new Vision Air device coming next year. The Vision Air would be a lighter-weight version of the Vision Pro XR headset, which is not recommended for everyday use.

Analyst Ming-Chi Kuo previously claimed that Apple is turning to wearables because it believes head-mounted devices are the next major trend in consumer technology.


C114 Communication Network
