Posterum AI

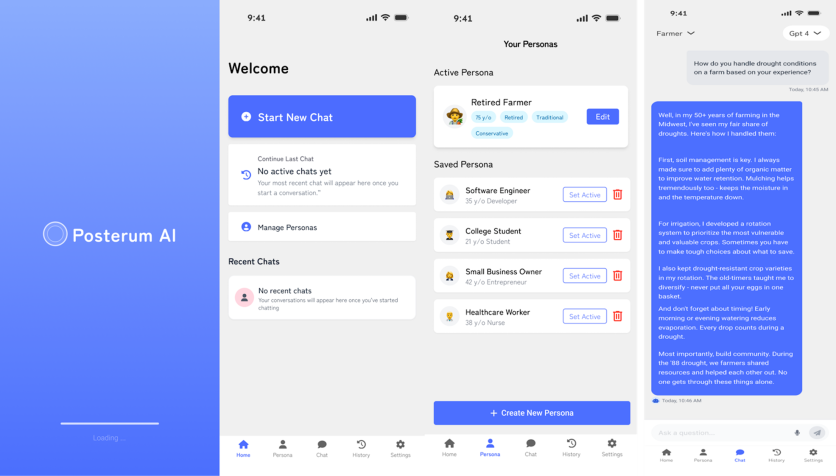

Posterum AI isn't just a chatbot or a glorified search engine. It is a platform for using and studying artificial intelligence with more personalization, depth, and control than typical AI apps. Most AI search tools and search engines collect data on users without their explicit consent, and the companies behind those tools then store and control that data. Posterum AI does not. It hands you the controls. While Google and others build invisible profiles from your clicks and browsing history, Posterum stores everything on your device. Nothing leaves your phone. No browsing history is logged. No data feeds back to servers. The app lets you create a detailed personal profile, including your political views, income, family structure, and location, and uses that context to guide responses from ChatGPT, Claude, Gemini, and DeepSeek. The difference is stark: instead of guessing who you are, the software listens to what you say and responds accordingly.

The October 2025 global release marks a turning point. After months of quiet beta testing, Posterum has demonstrated that personalization can be achieved without the surveillance that usually compromises privacy. Early users report answers that feel less like outputs and more like a conversation. The app does not track behavior. It responds to the identity and information that users choose to disclose.

A Rebellion Against Extraction

Posterum's privacy-first design stands in direct opposition to the dominant business model of Big Tech. Google processes billions of searches daily, each one feeding an advertising machine built on behavioral prediction. Bing follows a similar path. Both platforms personalize results through observation, building shadow profiles that users are often unaware of and rarely control. Posterum rejects that model entirely.

Every profile stays local. Users decide what to share. The app never sends personal data to the cloud. When you ask a question, the software shapes the reply using only the context you have provided, nothing more. A civil servant in Berlin, skeptical of corporate influence, adjusts her profile to reflect that caution. Her queries about data policy return answers that question enforcement rather than just summarizing the law. Another user in Nairobi, who balances gig work and caregiving, receives budgeting strategies that acknowledge cash-flow gaps rather than idealized savings plans.

Measuring What Matters

The Human-AI Variance Score (HAVS), as detailed in research by Jack Felix, Posterum's founder, quantifies how closely AI responses mimic those of real humans. The study drew on responses from professional surveys on topics such as financial stress, ethical dilemmas, and policy choices. Profiles matching those respondents were then used to prompt leading AI models with the same questions. Replies were scored on how closely they matched the survey respondents' answers across demographic variables.

Testing with Posterum AI reveals a clear pattern. Standard AI performs well on logic but falters on context. A model might describe debt repayment with textbook clarity but overlook the fatigue of someone working two jobs. When guided by a user-defined profile, however, AI responses scored higher on resemblance to human judgment, especially in areas involving contradiction, moral tension, or lived hardship. User-defined profiles also made responses markedly clearer about context and, importantly, helped mitigate bias.

Posterum's research methodology is rigorous. Profiles spanned urban and rural users, a range of income levels, and diverse political leanings. The approach reflects something rare: the HAVS score and the Posterum AI app are built not for investors but for users. They ask not how fast or comprehensive the AI is, but how relevant it feels.

Trust Through Transparency

The app's design reflects a broader philosophy. Privacy is not a feature. It is the foundation. Posterum's refusal to harvest data is not a marketing angle. It is a technical decision baked into the architecture. No centralized database. No analytics. No third-party access. When you shape your profile, you own it completely.

Early users describe the shift as tangible. Replies slow down. They qualify. They acknowledge ambiguity. A user in London noted that answers felt less transactional, more like advice from someone who knew her circumstances. Another in Hong Kong remarked that the app did not just respond. It adjusted.

Posterum says it has an obligation to protect user identity and respect each individual's context. That is how it builds trust, not just accuracy. And once that trust is established, accuracy can improve, because users share more of the information that matters. Future releases will expand profile options to include mental health, humor, and cultural identity. But the core promise remains. Posterum AI does not track you. It does not infer. It listens to you.

The standout app of 2025 is not the fastest or the largest. It is the one that stopped pretending to know you and started asking you for the right information so it can give you better results. It may seem like a simple idea, but we have yet to see another app like it.

-

C114 Communication Network