Brain–computer interfaces are beginning to truly "understand" Chinese.

The INSIDE Institute for NeuroAI, in collaboration with Huashan Hospital affiliated with Fudan University, the National Center for Neurological Diseases, and multiple research institutes and hospitals, has achieved a major breakthrough in implantable brain–computer interfaces.

Using a neural signal foundation model developed in-house, the team successfully decoded Chinese language from brain signals with high accuracy and strong generalization, opening a new clinical pathway for restoring language function in patients with speech and language impairments.

The research was validated using clinically approved implantable BCI devices in real medical settings, marking a critical transition for Chinese-language BCIs—from laboratory demonstrations to clinical usability.

This breakthrough means that patients with conditions such as ALS or post-stroke aphasia may one day be able to "speak" again through implanted brain–computer interfaces, enabling complete, everyday semantic expression.

INSIDE Brain

Key Breakthrough: Decoding the Neural "Pronunciation Code" of Chinese

Neural Input: Real Brain Signals from Multiple Regions and Depths

The study is based on stereoelectroencephalography (SEEG) electrodes implanted by Huashan Hospital's neurosurgical team under clinically approved conditions.

Using these electrodes, researchers were able to:

- Collect high-throughput neural signals from multiple brain regions and depths

- Capture the fine-grained neural dynamics involved in real language production

These signals served as the raw input for the Neural Signal Foundation Model™.

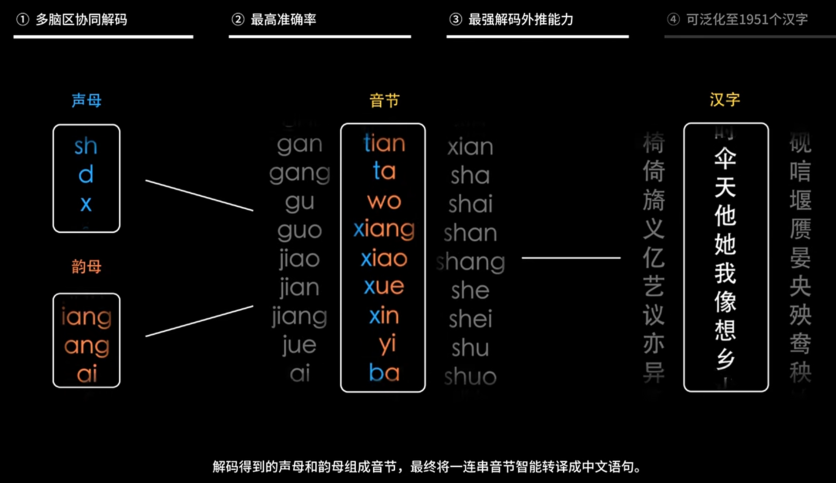

Decoding Architecture: Rebuilding Language from Its Smallest Units

Building on its proprietary Neural Signal Foundation Model™, the research team designed a four-level decoding framework aligned with the structure of spoken Chinese:

Initial consonants / final vowels → syllables → characters → sentences

This is not simple character prediction. Instead, the system reconstructs full semantic expression step by step, starting from the most fundamental phonetic units of Chinese. Crucially, this approach achieves precise Chinese language decoding using clinically viable electrode configurations.
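To make the four levels concrete, here is a minimal toy sketch of how decoded initials and finals could be assembled into syllables, mapped to candidate characters, and resolved into a sentence. It is not the team's model: the mini-lexicon, the bigram scores, and the function names (`to_syllable`, `decode_sentence`) are all hypothetical, and the greedy selection merely stands in for whatever language-level decoding the real system uses.

```python
from typing import List, Tuple

# Hypothetical mini-lexicon: toneless syllable -> candidate characters.
LEXICON = {
    "ni": ["你", "泥"],
    "hao": ["好", "号"],
}

# Hypothetical bigram scores standing in for sentence-level context.
BIGRAM_SCORE = {("你", "好"): 0.9}


def to_syllable(initial: str, final: str) -> str:
    """Level 1 -> 2: join a decoded initial and final into a syllable."""
    return initial + final


def decode_sentence(units: List[Tuple[str, str]]) -> str:
    """Levels 2 -> 4: choose one character per syllable using the previous character."""
    sentence = ""
    for initial, final in units:
        candidates = LEXICON[to_syllable(initial, final)]
        prev = sentence[-1] if sentence else None
        # Greedy choice; ties (all scores 0.0) fall back to the first candidate.
        best = max(candidates, key=lambda c: BIGRAM_SCORE.get((prev, c), 0.0))
        sentence += best
    return sentence


print(decode_sentence([("n", "i"), ("h", "ao")]))  # 你好
```

In this toy setup the second syllable is ambiguous between two characters, and only the context supplied by the first character resolves it, which is the role the upper levels of the hierarchy play.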


Why Is Chinese Language Decoding So Difficult?

- Chinese is a highly compressed language with strong ambiguity

- With only 418 syllables × 4 tones to cover thousands of characters, the same phonetic form can correspond to entirely different characters and meanings depending on context and tone

As a result, the brain's signals must be not only detected but also accurately distinguished, correctly combined, and continuously interpreted.
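The arithmetic behind this ambiguity can be sketched in a few lines. The syllable and tone counts come from the article; the product is an upper bound, since not every syllable occurs in every tone. The homophone table and the `candidates` helper are illustrative additions, using four common characters that are all pronounced "shì".

```python
SYLLABLES = 418  # figure quoted in the article
TONES = 4        # figure quoted in the article

# Upper bound on distinct tonal syllables available to the language.
tonal_syllables = SYLLABLES * TONES
print(tonal_syllables)  # 1672

# Illustrative homophones: one tonal syllable, several characters.
HOMOPHONES = {"shi4": ["是", "事", "市", "试"]}


def candidates(tonal_syllable):
    """Return the characters a tonal syllable could stand for (empty if unknown)."""
    return HOMOPHONES.get(tonal_syllable, [])


print(candidates("shi4"))  # ['是', '事', '市', '试']
```

Even a perfectly decoded syllable and tone can therefore leave several characters in play, so the final choice has to come from context.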

It is within this high-complexity linguistic system that INSIDE's Neural Signal Foundation Model™ achieved its breakthrough.


Real-Time Output: Full Chinese Sentences Generated in Under Half a Second

According to Professor Li Meng, Chief Scientist at INSIDE Brain Institute for NeuroAI:

"We can decode a complete Chinese sentence within 0.5 seconds, with no limit on sentence length. More importantly, we have broken through a long-standing accuracy bottleneck—achieving over 83% accuracy for 10 initial consonants and over 84% accuracy for 15 final vowels."

These results indicate that Chinese neural decoding is not only feasible, but fast and accurate enough for real communication.

A Breakthrough in Language Decoding Strategy

This research achieves leading performance across multiple key dimensions:

- Full neural coverage: Multi-region, depth-resolved SEEG signals are jointly decoded to comprehensively capture language-related brain activity.

- High-accuracy recognition: Industry-leading accuracy exceeding 83% for initials and 84% for finals.

- Strong generalization: With a 1:36 generalization ratio, the system requires only 100 minutes of training data and 54 characters to scale to nearly 2,000 commonly used Chinese characters.

- Large-scale decoding: Efficient decoding across 1,951 commonly used characters.

- Real-time performance: Per-sentence inference time under 0.5 seconds, supporting real-time generation of sentences of unlimited length.
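The figures in this list can be cross-checked with simple arithmetic. The sketch below assumes that the "generalization ratio" means decoded characters divided by training characters; the article does not define the term, so that reading is an assumption, as are the variable names.

```python
TRAINING_CHARACTERS = 54   # from the article
DECODED_CHARACTERS = 1951  # from the article
TRAINING_MINUTES = 100     # from the article

# Assumed definition: characters decoded per character seen in training.
ratio = DECODED_CHARACTERS / TRAINING_CHARACTERS
print(f"1:{ratio:.1f}")  # 1:36.1

# Average recording time per training character.
minutes_per_training_char = TRAINING_MINUTES / TRAINING_CHARACTERS
print(f"{minutes_per_training_char:.2f} minutes per training character")  # 1.85 minutes per training character
```

Under that reading, the reported 1:36 ratio is consistent with scaling from 54 training characters to 1,951 decoded characters.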

Importantly, because the framework is built on precise decoding of fundamental phonetic units, it is not limited to Chinese and has the potential to extend to other language systems.

Clinical Relevance: Language Restoration

The core value of this technology lies in its immediate clinical relevance:

- Chinese language decoding achieved using clinically standard implantable electrodes

- Generalization strong enough to support continuous, complete everyday communication

Multiple clinical cases at Huashan Hospital have already validated the system's performance.

This milestone places China at the international forefront of BCI-based language decoding—particularly for Chinese, one of the world's most complex language systems—and offers renewed hope to patients who have lost the ability to communicate.

Neural Signal Foundation Model™: The Long-Term Infrastructure Behind Next-Generation Brain–Computer Interfaces

The breakthrough behind this work is rooted in a long-term, system-level approach to brain–computer interface development.

Through sustained collaboration with leading research institutes and top neurosurgical hospitals, and by accumulating large-scale, high-quality EEG data, INSIDE Institute for NeuroAI has built a foundation designed to support BCI development over the long run.

Professor Li Meng has described the Neural Signal Foundation Model™ as "the ChatGPT of brain–computer interfaces." The comparison reflects three core capabilities:

1. Temporal Generalization — Reliable Over Time

The model maintains stable decoding performance across extended periods, addressing a long-standing challenge in traditional BCIs, where accuracy often degrades with continued use. As a result, users do not need to repeatedly recalibrate the system, enabling more reliable long-term operation.

2. Cross-Individual Generalization — Plug-and-Play Use

A single model can rapidly adapt to new users, reducing the lengthy, user-specific calibration process to just minutes. This enables a "plug-and-play" experience, allowing first-time users to get started quickly without extensive setup.

3. Task Generalization — One Model, Multiple Functions

With minimal fine-tuning, the same foundational model can support multiple tasks, overcoming the traditional limitation of building a separate model for each function.

Together, these three forms of generalization establish the Neural Signal Foundation Model™ as a stable, scalable, and efficient intelligence backbone, fundamentally advancing brain–computer interfaces from customized, single-purpose systems toward general-purpose platforms.

The long-term impact of this shift is now beginning to take shape.

Looking Ahead: Toward Seamless Communication Between Brain and World

Professor Li Meng states:

"Moving forward, iBrain will continue to refine its Neural Signal Foundation Model™ and develop its own BCI systems, enabling people who have lost speech due to illness or neurological injury to overcome physical limitations and communicate freely. Beyond that, we aim to further decode human intentions and mental states, ultimately enabling seamless communication between the brain and the external world."

This is not just a technological advance—it represents the opening of a new pathway for human communication.

Visit https://www.insidebrain.com/ for more information.

C114 Communication Network
