The surge in generative AI tools has given creators more options than ever, but it has also exposed a major gap: integration. Video, image, sound, and language generation have all evolved rapidly—yet most systems still operate in isolation. For creative professionals, the real challenge isn't producing content, but connecting all these capabilities in one consistent workflow.

Enter SkyReels, a newly launched AI video creation platform that approaches the problem from a systems perspective rather than a single-feature focus. With its V3 model, SkyReels combines multiple state-of-the-art models under one multimodal framework, offering a new level of coherence between visual, auditory, and narrative generation. The company describes it as "the world's first zero-barrier creative engine."

The Architecture Behind SkyReels V3

At the technical core of the platform is a Multi-modal In-Context Learning (ICL) framework, designed to process and cross-reference data between different input types—text, image, audio, and video—in real time. Unlike traditional pipelines that handle each medium separately, SkyReels' system learns relational patterns between modalities, allowing for synchronized generation and editing.

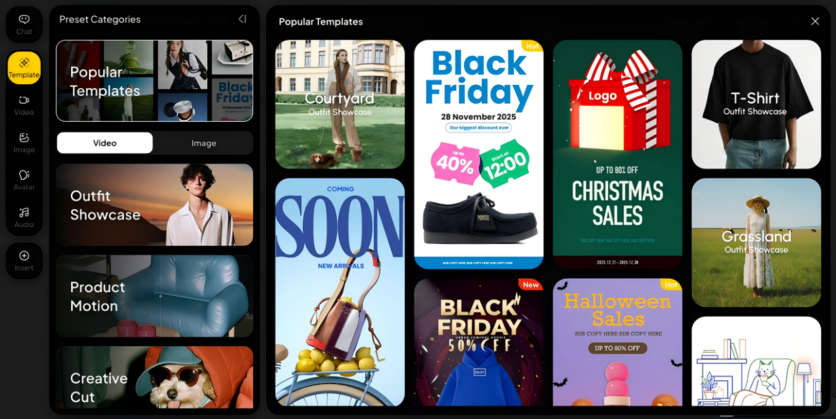

This architecture powers the platform's Limitless Canvas, an interface that serves as both workspace and engine. Users can drag, drop, or prompt directly in natural language, and the system dynamically adapts across modalities. Behind the scenes, SkyReels orchestrates multiple foundation models, including Google VEO 3.1, Sora 2, Runway, Nano Banana, GPT Image, and Seedream 4.0. The models aren't just embedded individually; they operate within a unified pre-trained framework that maintains temporal consistency and semantic coherence across outputs.

The Agentic Layer: Turning Models into Colleagues

Where SkyReels diverges most sharply from competitors is in how it structures intelligence within the platform. Its Agentic Copilot introduces a layered AI system made up of a Super Agent and 28 Expert Agents, each optimized for specific creative or operational roles.

The Super Agent functions as the interpreter, converting user intent into actionable workflows that can involve one or multiple Expert Agents. For example, a prompt such as "Create a 15-second ad for a skincare brand with a digital spokesperson and upbeat background music" triggers a sequence involving agents for scriptwriting, visual composition, avatar generation, and soundtrack design.
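The routing idea in this example can be illustrated with a minimal sketch. It is not SkyReels' implementation: the agent names come from the four roles mentioned above, while the keyword table, the `Step` type, and the `plan_workflow` function are hypothetical stand-ins, and simple keyword matching replaces whatever intent parsing the real Super Agent performs.

```python
from __future__ import annotations

from dataclasses import dataclass

# Hypothetical routing table: the four agent roles are those named in the
# article's example; the trigger keywords are invented for illustration.
AGENT_KEYWORDS = {
    "scriptwriting": ("ad", "script", "story"),
    "visual_composition": ("ad", "video", "scene"),
    "avatar_generation": ("spokesperson", "avatar", "presenter"),
    "soundtrack_design": ("music", "soundtrack", "audio"),
}


@dataclass
class Step:
    """One unit of work handed to a single expert agent."""
    agent: str
    instruction: str


def plan_workflow(prompt: str) -> list[Step]:
    """Return one Step per agent whose keywords appear in the prompt.

    Keyword matching is an assumption made to keep the sketch runnable;
    it stands in for real intent interpretation.
    """
    words = set(prompt.lower().replace(",", " ").replace(".", " ").split())
    steps = []
    for agent, keywords in AGENT_KEYWORDS.items():
        if words & set(keywords):
            steps.append(Step(agent=agent, instruction=prompt))
    return steps


if __name__ == "__main__":
    request = (
        "Create a 15-second ad for a skincare brand with a digital "
        "spokesperson and upbeat background music"
    )
    for step in plan_workflow(request):
        print(step.agent)
```

Run against the skincare prompt, the sketch selects all four agents in table order. A production system would presumably also order the steps by dependency, for instance script before visuals, which this sketch does not attempt.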

Each agent can process multimodal inputs—including text, images, and reference videos—and outputs are refined collaboratively within the shared framework. The result is not just faster generation, but a more structured form of human-AI collaboration that mirrors the dynamics of a creative team. Here are the demos showing how it works:

https://www.youtube.com/@SkyReels_AI/featured

Extending the Video Language

SkyReels' V3 model also includes Video Extension and Stylization engines, both of which use predictive modeling to maintain narrative flow. The extension system can generate next-shot predictions using contextual semantics, enabling complex transitions such as reverse shots, cut-ins, and multi-angle sequences.

The Stylization engine, meanwhile, applies detailed visual transformations while preserving object geometry and motion continuity. Instead of the frame-by-frame filtering used by most consumer tools, SkyReels' approach uses time-aware embeddings to maintain consistent lighting and structure. Supported styles range from realism and papercut animation to LEGO, Van Gogh, and cinematic color grading—offering creators a spectrum of artistic control without degradation in quality.

Applications Across Industries

While the interface has the accessibility of a creator app, SkyReels' underlying system is designed for scalability. The combination of multi-agent orchestration and multimodal ICL makes it adaptable across industries.

- Marketing and E-commerce: Generate and localize campaign assets at scale while maintaining brand consistency.

- Education and Training: Build digital-human instructors capable of multi-turn conversation in a single frame.

- Entertainment: Prototype scenes, storyboards, or cinematic concepts without production overhead.

- Enterprise Communication: Turn data and narratives into visual presentations within minutes.

These use cases point toward a broader transition in how AI is being implemented: from generating isolated content to powering creative infrastructure that integrates multiple modalities and workflows.

A Framework for the Zero-Barrier Era

SkyReels' ambition is to remove what it calls the "computational literacy barrier." By embedding advanced multimodal systems inside an intuitive front end, the company hopes to make AI creation as accessible as typing a prompt or moving a slider.

It's an approach that treats design and engineering as parts of the same system—one focused on clarity, speed, and scalability. If earlier generations of creative software digitized production, SkyReels aims to intelligently network it, connecting tools, agents, and creators in one continuous environment.

"Every generation of technology lowers the barrier to creation," SkyReels says. "This one removes it completely."

As the AI ecosystem shifts from isolated models to orchestrated platforms, SkyReels offers a glimpse of what that next step could look like—a system where imagination, computation, and collaboration finally operate on the same canvas.

Explore the SkyReels platform for yourself at www.skyreels.ai.
